fuel-dev team mailing list archive

Message #01200

Re: nova hypervisor-list does not lists compute nodes

Based on your service list output, you have no compute services registered.
With a working compute node, nova service-list should return something like:

+------------------+--------+----------+---------+-------+----------------------------+-----------------+
| Binary           | Host   | Zone     | Status  | State | Updated_at                 | Disabled Reason |
+------------------+--------+----------+---------+-------+----------------------------+-----------------+
| nova-conductor   | node-1 | internal | enabled | up    | 2014-06-20T22:26:57.000000 | -               |
| nova-cert        | node-1 | internal | enabled | up    | 2014-06-20T22:26:57.000000 | -               |
| nova-consoleauth | node-1 | internal | enabled | up    | 2014-06-20T22:26:57.000000 | -               |
| nova-scheduler   | node-1 | internal | enabled | up    | 2014-06-20T22:26:57.000000 | -               |
| nova-compute     | node-2 | nova     | enabled | up    | 2014-06-20T22:26:57.000000 | -               |
+------------------+--------+----------+---------+-------+----------------------------+-----------------+

Once a nova-compute service shows up in that list, you can expect nova
hypervisor-list to work as well:

root@node-1:~# nova hypervisor-list
+----+---------------------+
| ID | Hypervisor hostname |
+----+---------------------+
| 1  | node-2              |
+----+---------------------+
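If you want to script the service check, a minimal Python sketch could look like the one below. It assumes the CLI prints the ASCII table layout of the nova service-list sample earlier in this message; the names parse_table and compute_hosts_up are made up for illustration and are not part of any nova tooling.

```python
def parse_table(text):
    """Parse an ASCII table (as printed by the nova CLI) into a list of dicts."""
    rows = []
    header = None
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip +----+ borders and blank lines
        cells = [c.strip() for c in line.strip("|").split("|")]
        if header is None:
            header = cells  # first |...| line holds the column names
        else:
            rows.append(dict(zip(header, cells)))
    return rows


def compute_hosts_up(text):
    """Return the hosts whose nova-compute service is reported as 'up'."""
    return [
        row["Host"]
        for row in parse_table(text)
        if row.get("Binary") == "nova-compute" and row.get("State") == "up"
    ]


# Two rows copied from the sample output; paste real CLI output here instead.
SAMPLE = """\
+------------------+--------+----------+---------+-------+----------------------------+-----------------+
| Binary           | Host   | Zone     | Status  | State | Updated_at                 | Disabled Reason |
+------------------+--------+----------+---------+-------+----------------------------+-----------------+
| nova-conductor   | node-1 | internal | enabled | up    | 2014-06-20T22:26:57.000000 | -               |
| nova-compute     | node-2 | nova     | enabled | up    | 2014-06-20T22:26:57.000000 | -               |
+------------------+--------+----------+---------+-------+----------------------------+-----------------+
"""

if __name__ == "__main__":
    print(compute_hosts_up(SAMPLE))
```

An empty list here corresponds to the situation in this thread: no nova-compute service has registered, so nova hypervisor-list has nothing to show.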

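So you need to troubleshoot why the compute node can't connect to the
RabbitMQ process on the controller. The nova-compute.log you posted shows
it trying the AMQP server on localhost:5672 and getting ECONNREFUSED. You
can start by pinging the controller's management IP from the compute node.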
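Ping only tests ICMP, so it is also worth checking the TCP port itself. Here is a minimal Python sketch, assuming RabbitMQ listens on the default port 5672 (the port that appears in the quoted nova-compute.log). The helper name can_connect is hypothetical, and the host is whatever you pass on the command line, for example the controller's management IP.

```python
import socket
import sys


def can_connect(host, port=5672, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (run on the compute node): python check_amqp.py <controller-ip>
    target = sys.argv[1]
    state = "reachable" if can_connect(target) else "NOT reachable"
    print("AMQP port 5672 on %s is %s" % (target, state))
```

If the port is reachable from the compute node but nova-compute still logs localhost:5672, the problem is more likely in the RabbitMQ host settings in nova.conf on the compute node than in the network.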

On Thu, Jun 19, 2014 at 3:26 PM, Mike Scherbakov <mscherbakov@xxxxxxxxxxxx>

wrote:

> +1 to Andrey.

>

> One side note - please try not to post emails with pictures. It's a
> mailing list and it has its own rules, sorry, they were invented a long
> time ago for a reason - pictures are hard to read and some clients don't
> show them correctly. The same applies to different fonts and colors. I've
> also removed the Brocade mailing list from Cc, as external users can't post
> messages there, and it replies with a delivery failure every time.

>

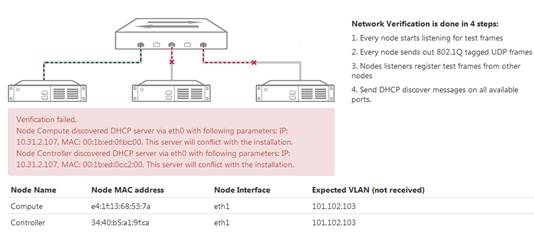

> Regarding the issue, please see [1] and [2] in the Fuel user guide to
> address it, if you still want to run on hardware. There's a 95% chance
> that you haven't configured your switch correctly.

>

> [1]

> http://docs.mirantis.com/openstack/fuel/fuel-5.0/user-guide.html?highlight=network%20verification#verify-networks

> [2]

> http://docs.mirantis.com/openstack/fuel/fuel-5.0/pre-install-guide.html#calculating-network

>

> Thank you,

>

>

> On Fri, Jun 20, 2014 at 2:18 AM, Andrey Danin <adanin@xxxxxxxxxxxx> wrote:

>

>> Maybe you should try our VirtualBox scripts first? You can just run them,
>> wait until they finish, then create a non-HA Neutron GRE cluster with one
>> controller, one compute, and one cinder node, and deploy the cluster
>> without any changes on the Settings and Networks tabs. It should work fine.
>> In this case you will get a reference environment to compare with your dev
>> environment on real hardware.

>>

>> https://software.mirantis.com/quick-start/

>>

>>

>> On Thu, Jun 19, 2014 at 12:14 PM, Gandhirajan Mariappan (CW) <

>> gmariapp@xxxxxxxxxxx> wrote:

>>

>>> Hi Mike,

>>>

>>>

>>>

>>> Verify Networks is failing for the reasons below:

>>>

>>>

>>>

>>>

>>>

>>> root@node-5:~# nova-manage service list
>>> Binary           Host    Zone      Status   State  Updated_At
>>> nova-conductor   node-6  internal  enabled  :-)    2014-06-19 08:14:08
>>> nova-consoleauth node-6  internal  enabled  :-)    2014-06-19 08:13:59
>>> nova-cert        node-6  internal  enabled  :-)    2014-06-19 08:14:00
>>> nova-scheduler   node-6  internal  enabled  :-)    2014-06-19 08:14:01

>>>

>>>

>>>

>>> Thanks and Regards,

>>>

>>> Gandhi Rajan

>>>

>>>

>>>

>>> *From:* Mike Scherbakov [mailto:mscherbakov@xxxxxxxxxxxx]

>>> *Sent:* Thursday, June 19, 2014 1:02 PM

>>>

>>> *To:* Gandhirajan Mariappan (CW)

>>> *Cc:* Miroslav Anashkin; fuel-dev@xxxxxxxxxxxxxxxxxxx; Prakash

>>> Kaligotla; DL-GRP-ENG-SQA-Open Stack

>>> *Subject:* Re: [Fuel-dev] nova hypervisor-list does not lists compute

>>> nodes

>>>

>>>

>>>

>>> You don't need to tweak config. Let's figure out why it's broken from

>>> the very beginning.

>>>

>>> First of all, let me know if Verify Networks feature doesn't show any

>>> errors.

>>>

>>>

>>>

>>> Then, try to issue "nova-manage service list" instead of using nova api.

>>>

>>>

>>>

>>> Thanks,

>>>

>>>

>>>

>>> On Thu, Jun 19, 2014 at 11:27 AM, Gandhirajan Mariappan (CW) <

>>> gmariapp@xxxxxxxxxxx> wrote:

>>>

>>> Hi Mike,

>>>

>>>

>>>

>>> We are using Fuel version 5.0.

>>>

>>> Initially, without changing any configuration, we tried “nova

>>> hypervisor-list”, but it didn’t list anything.

>>>

>>> So we tried changing the below lines, but still it didn’t list compute

>>> node while executing “nova hypervisor-list”

>>>

>>>

>>>

>>> *Changes Made:*

>>>

>>> We have replaced all the references of 192.168.0.3 to 10.24.41.3 in

>>> Compute node -> /etc/nova/nova.conf file.

>>>

>>> Also modified Compute node -> /etc/nova/nova-compute.conf with the below

>>> lines -

>>>

>>>

>>>

>>> [DEFAULT]
>>> compute_driver=libvirt.LibvirtDriver
>>> #sql_connection=mysql://nova:gfW4v98X@10.24.41.3/nova?charset=utf8
>>> #sql_connection=mysql://nova:gfW4v98X@10.24.41.3/nova?read_timeout=60
>>> sql_connection=mysql://nova:gfW4v98X@10.24.41.3/nova?read_timeout=60
>>> #neutron_url=http://10.24.41.3:9696
>>> neutron_url=http://10.24.41.3:9696
>>> neutron_admin_username=neutron
>>> #neutron_admin_password=password
>>> neutron_admin_password=TdvGnCTq
>>> neutron_admin_tenant_name=services
>>> neutron_region_name=RegionOne
>>> #neutron_admin_auth_url=http://10.24.41.3:35357/v2.0
>>> neutron_admin_auth_url=http://10.24.41.3:35357/v2.0
>>> neutron_auth_strategy=keystone
>>> security_group_api=neutron
>>> debug=True
>>> verbose=True
>>> connection_type=libvirt
>>> network_api_class=nova.network.neutronv2.api.API
>>> libvirt_use_virtio_for_bridges=True
>>> neutron_default_tenant_id=default
>>> rabbit_userid=nova
>>> rabbit_password=WENFYGnk
>>>
>>> [libvirt]
>>> virt_type=kvm
>>> compute_driver=libvirt.LibvirtDriver
>>> libvirt_ovs_bridge=br-int
>>> libvirt_vif_type=ethernet
>>> libvirt_vif_driver=nova.virt.libvirt.vif.NeutronLinuxBridgeVIFDriver

>>>

>>>

>>>

>>> If possible, please share with us the Compute node nova.conf and
>>> nova-compute.conf files and the Controller node nova.conf file, so that
>>> we can also cross-check the configurations.

>>>

>>>

>>>

>>> Thanks and Regards,

>>>

>>> Gandhi Rajan

>>>

>>>

>>>

>>> *From:* Mike Scherbakov [mailto:mscherbakov@xxxxxxxxxxxx]

>>> *Sent:* Thursday, June 19, 2014 12:49 PM

>>> *To:* Gandhirajan Mariappan (CW)

>>> *Cc:* Miroslav Anashkin; fuel-dev@xxxxxxxxxxxxxxxxxxx; Prakash

>>> Kaligotla; DL-GRP-ENG-SQA-Open Stack

>>> *Subject:* Re: [Fuel-dev] nova hypervisor-list does not lists compute

>>> nodes

>>>

>>>

>>>

>>> Hi Gandhirajan,

>>>

>>> what version of Fuel do you use?

>>>

>>>

>>>

>>> For 5.0, I've just tried, it works correct (with Neutron enabled

>>> deployment, though I don't think it matters):

>>>

>>> [root@node-2 ~]# nova hypervisor-list

>>>

>>> +----+---------------------+

>>>

>>> | ID | Hypervisor hostname |

>>>

>>> +----+---------------------+

>>>

>>> | 1 | node-1.domain.tld |

>>>

>>> +----+---------------------+

>>>

>>>

>>>

>>> Did you make any modifications to the configuration?

>>>

>>>

>>>

>>> On Wed, Jun 18, 2014 at 4:56 PM, Gandhirajan Mariappan (CW) <

>>> gmariapp@xxxxxxxxxxx> wrote:

>>>

>>> Gentle Reminder!

>>>

>>> Kindly help us in listing compute node on executing “nova

>>> hypervisor-list” command. We have only one compute node and that compute

>>> node should get listed.

>>>

>>>

>>>

>>> Thanks and Regards,

>>>

>>> Gandhi Rajan

>>>

>>>

>>>

>>> *From:* Gandhirajan Mariappan (CW)

>>> *Sent:* Tuesday, June 17, 2014 3:12 PM

>>> *To:* 'Miroslav Anashkin'

>>>

>>>

>>> *Cc:* fuel-dev@xxxxxxxxxxxxxxxxxxx; DL-GRP-ENG-SQA-Open Stack; Prakash

>>> Kaligotla; eshumakher@xxxxxxxxxxxx; adanin@xxxxxxxxxxxx;

>>> izinovik@xxxxxxxxxxxx

>>>

>>> *Subject:* RE: nova hypervisor-list does not lists compute nodes

>>>

>>>

>>>

>>> Hi Miroslav,

>>>

>>>

>>>

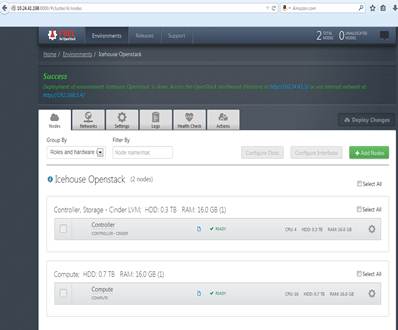

>>> Compute node deployment is successful. Please find the screenshot below.
>>> “nova hypervisor-list” is empty because the nova-compute.conf file was not
>>> configured automatically and only the lines below were available.

>>>

>>>

>>>

>>> [DEFAULT]
>>> compute_driver=libvirt.LibvirtDriver
>>> #sql_connection=mysql://nova:gfW4v98X@192.168.0.3/nova?charset=utf8
>>> [libvirt]
>>> virt_type=kvm

>>>

>>>

>>>

>>>

>>>

>>>

>>>

>>> Our understanding is that the /etc/nova/nova-compute.conf file is
>>> required on the Compute node only and not on the Controller node. The
>>> Compute node will use this nova-compute.conf file to inform Neutron on the
>>> Controller node to assign IP addresses and so on. I have already provided
>>> the lines added to the nova-compute.conf file in the mail conversation
>>> below.

>>>

>>>

>>>

>>> Kindly let us know why the hypervisor-list is not listing the compute

>>> nodes and what are the configurations we have to make so that

>>> hypervisor-list will list the compute nodes.

>>>

>>>

>>>

>>> Thanks and Regards,

>>>

>>> Gandhi Rajan

>>>

>>>

>>>

>>> *From:* Miroslav Anashkin [mailto:manashkin@xxxxxxxxxxxx]

>>> *Sent:* Tuesday, June 17, 2014 1:00 AM

>>> *To:* Gandhirajan Mariappan (CW)

>>> *Cc:* fuel-dev@xxxxxxxxxxxxxxxxxxx; DL-GRP-ENG-SQA-Open Stack; Prakash

>>> Kaligotla; eshumakher@xxxxxxxxxxxx; adanin@xxxxxxxxxxxx;

>>> izinovik@xxxxxxxxxxxx

>>> *Subject:* Re: nova hypervisor-list does not lists compute nodes

>>>

>>>

>>>

>>> Greetings Gandhi,

>>>

>>> Has Fuel deployed your compute node successfully? It should create all
>>> the configuration files automatically.
>>>
>>> BTW, there is no /etc/nova/nova-compute.conf in OpenStack. There is
>>> /etc/nova/nova.conf on both controller and compute nodes.

>>>

>>> Kind regards,

>>>

>>> Miroslav

>>>

>>>

>>>

>>> On Mon, Jun 16, 2014 at 4:09 PM, Gandhirajan Mariappan (CW) <

>>> gmariapp@xxxxxxxxxxx> wrote:

>>>

>>> Hi Fuel Dev,

>>>

>>>

>>>

>>> We are facing an issue listing hypervisors on the Controller node.
>>> Kindly let us know why nova hypervisor-list does not list the compute node.
>>> Also, it would be great if you could share a sample nova-compute.conf file
>>> with us. Details are provided below:

>>>

>>>

>>>

>>> *Controller Node : 10.24.41.3*
>>>
>>> root@node-6:~# nova hypervisor-list
>>> +----+---------------------+
>>> | ID | Hypervisor hostname |
>>> +----+---------------------+
>>> +----+---------------------+

>>>

>>>

>>>

>>> Since nova hypervisor-list is empty, we have added the lines below on
>>> *Compute Node 10.24.41.2* in the */etc/nova/nova-compute.conf* file:

>>>

>>> [DEFAULT]
>>> compute_driver=libvirt.LibvirtDriver
>>> #sql_connection=mysql://nova:gfW4v98X@192.168.0.3/nova?charset=utf8
>>> sql_connection=mysql://nova:gfW4v98X@192.168.0.3/nova?read_timeout=60
>>> neutron_url=http://192.168.0.3:9696
>>> neutron_admin_username=neutron
>>> #neutron_admin_password=password
>>> neutron_admin_password=TdvGnCTq
>>> neutron_admin_tenant_name=services
>>> neutron_region_name=RegionOne
>>> neutron_admin_auth_url=http://192.168.0.3:35357/v2.0
>>> neutron_auth_strategy=keystone
>>> security_group_api=neutron
>>> debug=True
>>> verbose=True
>>> connection_type=libvirt
>>> network_api_class=nova.network.neutronv2.api.API
>>> libvirt_use_virtio_for_bridges=True
>>> neutron_default_tenant_id=default
>>> rabbit_userid=nova
>>> rabbit_password=WENFYGnk
>>>
>>> [libvirt]
>>> virt_type=kvm
>>> compute_driver=libvirt.LibvirtDriver
>>> libvirt_ovs_bridge=br-int
>>> libvirt_vif_type=ethernet
>>> libvirt_vif_driver=nova.virt.libvirt.vif.NeutronLinuxBridgeVIFDriver

>>>

>>>

>>>

>>>

>>>

>>> *Logs from Compute node : 10.24.41.2*
>>>
>>> */var/log/nova/nova-compute.log*
>>>
>>> 2014-06-12 06:26:11.250 14211 INFO nova.openstack.common.periodic_task [-] Skipping periodic task _periodic_update_dns because its interval is negative
>>> 2014-06-12 06:26:11.368 14211 INFO nova.virt.driver [-] Loading compute driver 'libvirt.LibvirtDriver'
>>> 2014-06-12 06:26:11.420 14211 ERROR oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] AMQP server on localhost:5672 is unreachable: [Errno 111] ECONNREFUSED. Trying again in 1 seconds.
>>> 2014-06-12 06:26:12.422 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Reconnecting to AMQP server on localhost:5672
>>> 2014-06-12 06:26:12.423 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Delaying reconnect for 1.0 seconds...
>>> 2014-06-12 06:26:13.435 14211 ERROR oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] AMQP server on localhost:5672 is unreachable: [Errno 111] ECONNREFUSED. Trying again in 3 seconds.
>>> 2014-06-12 06:26:16.436 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Reconnecting to AMQP server on localhost:5672
>>> 2014-06-12 06:26:16.436 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Delaying reconnect for 1.0 seconds...
>>> 2014-06-12 06:26:17.449 14211 ERROR oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] AMQP server on localhost:5672 is unreachable: [Errno 111] ECONNREFUSED. Trying again in 5 seconds.
>>> 2014-06-12 06:26:22.452 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Reconnecting to AMQP server on localhost:5672
>>> 2014-06-12 06:26:22.453 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Delaying reconnect for 1.0 seconds...
>>> 2014-06-12 06:26:23.462 14211 ERROR oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] AMQP server on localhost:5672 is unreachable: [Errno 111] ECONNREFUSED. Trying again in 7 seconds.
>>> 2014-06-12 06:26:30.464 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Reconnecting to AMQP server on localhost:5672
>>> 2014-06-12 06:26:30.465 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Delaying reconnect for 1.0 seconds...
>>> 2014-06-12 06:26:31.477 14211 ERROR oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] AMQP server on localhost:5672 is unreachable: [Errno 111] ECONNREFUSED. Trying again in 9 seconds.
>>> 2014-06-12 06:26:40.481 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Reconnecting to AMQP server on localhost:5672
>>> 2014-06-12 06:26:40.482 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Delaying reconnect for 1.0 seconds...
>>> 2014-06-12 06:26:41.493 14211 ERROR oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] AMQP server on localhost:5672 is unreachable: [Errno 111] ECONNREFUSED. Trying again in 11 seconds.
>>> 2014-06-12 06:26:52.500 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Reconnecting to AMQP server on localhost:5672
>>> 2014-06-12 06:26:52.501 14211 INFO oslo.messaging._drivers.impl_rabbit [req-6385ba52-b54c-4b61-b211-c49ce9203819 ] Delaying reconnect for 1.0 seconds...

>>>

>>>

>>>

>>>

>>>

>>> */var/log/nova/nova.log* is empty
>>>
>>> */var/log/nova/nova-manage.log*
>>>
>>> 2014-06-12 06:25:51.723 13898 INFO migrate.versioning.api [-] 215 -> 216...
>>> 2014-06-12 06:26:05.989 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:05.989 13898 INFO migrate.versioning.api [-] 216 -> 217...
>>> 2014-06-12 06:26:06.052 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.052 13898 INFO migrate.versioning.api [-] 217 -> 218...
>>> 2014-06-12 06:26:06.115 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.115 13898 INFO migrate.versioning.api [-] 218 -> 219...
>>> 2014-06-12 06:26:06.183 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.184 13898 INFO migrate.versioning.api [-] 219 -> 220...
>>> 2014-06-12 06:26:06.246 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.247 13898 INFO migrate.versioning.api [-] 220 -> 221...
>>> 2014-06-12 06:26:06.309 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.309 13898 INFO migrate.versioning.api [-] 221 -> 222...
>>> 2014-06-12 06:26:06.372 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.372 13898 INFO migrate.versioning.api [-] 222 -> 223...
>>> 2014-06-12 06:26:06.435 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.436 13898 INFO migrate.versioning.api [-] 223 -> 224...
>>> 2014-06-12 06:26:06.498 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.498 13898 INFO migrate.versioning.api [-] 224 -> 225...
>>> 2014-06-12 06:26:06.561 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.561 13898 INFO migrate.versioning.api [-] 225 -> 226...
>>> 2014-06-12 06:26:06.623 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.624 13898 INFO migrate.versioning.api [-] 226 -> 227...
>>> 2014-06-12 06:26:06.692 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.692 13898 INFO migrate.versioning.api [-] 227 -> 228...
>>> 2014-06-12 06:26:06.886 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:06.887 13898 INFO migrate.versioning.api [-] 228 -> 229...
>>> 2014-06-12 06:26:07.081 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:07.081 13898 INFO migrate.versioning.api [-] 229 -> 230...
>>> 2014-06-12 06:26:07.401 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:07.401 13898 INFO migrate.versioning.api [-] 230 -> 231...
>>> 2014-06-12 06:26:07.606 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:07.607 13898 INFO migrate.versioning.api [-] 231 -> 232...
>>> 2014-06-12 06:26:08.178 13898 INFO migrate.versioning.api [-] done
>>> 2014-06-12 06:26:08.179 13898 INFO migrate.versioning.api [-] 232 -> 233...
>>> 2014-06-12 06:26:08.515 13898 INFO migrate.versioning.api [-] done

>>>

>>>

>>>

>>> Thanks and Regards,

>>>

>>> Gandhi Rajan

>>>

>>>

>>>

>>>

>>> --

>>>

>>>

>>>

>>> Kind Regards
>>> Miroslav Anashkin
>>> L2 support engineer,
>>> Mirantis Inc.
>>> +7(495)640-4944 (office receptionist)
>>> +1(650)587-5200 (office receptionist, call from US)
>>> 35b, Bld. 3, Vorontsovskaya St.
>>> Moscow, Russia, 109147.

>>>

>>> www.mirantis.com

>>>

>>> manashkin@xxxxxxxxxxxx

>>>

>>>

>>> --

>>> Mailing list: https://launchpad.net/~fuel-dev

>>> Post to : fuel-dev@xxxxxxxxxxxxxxxxxxx

>>> Unsubscribe : https://launchpad.net/~fuel-dev

>>> More help : https://help.launchpad.net/ListHelp

>>>

>>>

>>>

>>>

>>>

>>> --

>>> Mike Scherbakov

>>> #mihgen

>>>

>>>

>>>

>>>

>>>

>>> --

>>> Mike Scherbakov

>>> #mihgen

>>>


>>>

>>>

>>

>>

>> --

>> Andrey Danin

>> adanin@xxxxxxxxxxxx

>> skype: gcon.monolake

>>

>

>

>

> --

> Mike Scherbakov

> #mihgen

>

>


>

>

--

Andrew

Mirantis

Ceph community
