fuel-dev team mailing list archive

Message #01014

Re: Queries on Open Stack deployment

Greetings Karthi and all,

For #2,

As far as I know, the Brocade VCS plugin for OpenStack is a management

interface for Brocade VDX series hardware network switches.

So, to make all network traffic go through the hardware switch, one must use

bare-metal hardware for every OpenStack node.

A second possible scenario is to install VirtualBox (or another virtualization

platform) on a machine with at least 10 hardware network ports connected

to the hardware VDX switch, configure all virtual network adapters in

VirtualBox as Bridged, and set each bridged adapter to use a separate

physical network port.
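
The bridged setup described above can be sketched as a small Python helper that only builds the `VBoxManage modifyvm` command lines and does not run them. The VM name and host interface names are hypothetical placeholders; `--nicN bridged` and `--bridgeadapterN` are the VirtualBox options for attaching virtual NIC N to a host interface. This is a sketch under those assumptions, not a tested deployment script.

```python
def bridged_nic_commands(vm_name, host_ports):
    """Build one VBoxManage command per virtual NIC.

    Virtual NIC slot N (starting at 1) is bridged to the N-th host port,
    so every virtual adapter gets its own physical network port.
    """
    commands = []
    for slot, port in enumerate(host_ports, start=1):
        commands.append([
            "VBoxManage", "modifyvm", vm_name,
            f"--nic{slot}", "bridged",
            f"--bridgeadapter{slot}", port,
        ])
    return commands


# Hypothetical VM name and host interface names, for illustration only.
for cmd in bridged_nic_commands("fuel-slave-1", ["eth1", "eth2", "eth3"]):
    print(" ".join(cmd))
```

`VBoxManage modifyvm` changes the settings of a VM that is powered off, so the printed commands would be applied before the nodes are started.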

10 hardware network ports should be enough to install the Mirantis OpenStack

master node (1 network port required) and 3 OpenStack nodes (Controller + 2

Computes), with 3 network ports each.

For NIC bonding scenarios, 3 extra network ports, bridged to the corresponding

extra virtual NICs, are recommended.

In addition, you will probably want one more network port to use as

an ordinary network interface for the host OS.
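
The port arithmetic above can be checked with a short Python sketch. The function and parameter names are illustrative, and the 3 bonding ports are read here as 3 in total (an assumption; the text does not say whether they are per node).

```python
def required_ports(nodes=3, nics_per_node=3, master_ports=1,
                   bonding_ports=0, host_ports=0):
    """Total physical ports needed on the virtualization host."""
    return master_ports + nodes * nics_per_node + bonding_ports + host_ports


# Master node (1 port) plus Controller + 2 Computes with 3 ports each.
base = required_ports()
# Same layout with the 3 extra ports recommended for NIC bonding.
with_bonding = required_ports(bonding_ports=3)
# Bonding ports plus one ordinary interface for the host OS.
with_host_nic = required_ports(bonding_ports=3, host_ports=1)

print(base, with_bonding, with_host_nic)  # prints: 10 13 14
```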

Since Mirantis OpenStack requires at least 2 non-intersecting physical

networks, either connect each network to a separate hardware switch or use

a single switch with appropriate port groups configured for each physical

network. No ARP, broadcast, or other network traffic should leave

its port group.

Promiscuous traffic should be allowed for each port group connected to

OpenStack nodes; this is mandatory for Neutron.

No DHCP server may be connected to or visible inside these port groups.

In addition, one has to manually reconfigure the deployed OpenStack networking

scheme to use the Brocade VCS plugin after OpenStack is installed.

For #3,

Yes, within the scope of this certification I recommend that not just one but all

nodes be bare-metal physical servers.

For #3a:

Mirantis OpenStack has a hardware discovery feature based on PXE boot.

To get your hardware node discovered, simply connect it to the dedicated

hardware network (the Admin network in the Mirantis OpenStack documentation) and make

this node PXE-bootable from the master node; it will then be discovered

automatically with all its hardware NICs, HDDs, CPUs, etc.

Please note: no DHCP server other than the Mirantis OpenStack master node

should exist inside this Admin network segment.

If particular hardware does not appear in the properties of the discovered node,

it may mean that the PXE bootstrap image has no drivers for that

hardware.

In that case, please let us know and we'll do our best to add the

necessary drivers.

For #3b:

There is no difference in the configuration of network interfaces, whether they are

located in physical servers or virtual ones.

Both physical and virtual NICs appear in the Mirantis OpenStack Fuel UI the

same way.

However, if one uses a hardware network switch to connect nodes, one has to

enable/allow the proper VLANs on each switch port connected to OpenStack

nodes.

The VLAN numbers allowed on the switch ports should match the ones

configured for each NIC/network in the Mirantis OpenStack Fuel UI.
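
The VLAN matching rule can be illustrated with a minimal Python sketch. It assumes both VLAN lists have already been collected by hand, one from the Fuel UI and one from the switch configuration. The port names and VLAN IDs are made up for the example, and nothing here queries Fuel or the switch.

```python
def missing_vlans(fuel_vlans, switch_allowed):
    """Return, per switch port, the VLAN IDs configured in Fuel
    that the switch port does not allow."""
    problems = {}
    for port, needed in fuel_vlans.items():
        allowed = set(switch_allowed.get(port, ()))
        missing = set(needed) - allowed
        if missing:
            problems[port] = sorted(missing)
    return problems


# Hypothetical data: VLANs set per NIC/network in the Fuel UI ...
fuel_vlans = {"port-1": {101, 102}, "port-2": {1000, 1001}}
# ... and VLANs allowed on the matching switch ports.
switch_allowed = {"port-1": {101, 102}, "port-2": {1000}}

print(missing_vlans(fuel_vlans, switch_allowed))  # prints: {'port-2': [1001]}
```

An empty result means every VLAN configured in Fuel is allowed on its switch port.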

*Kind Regards*

*Miroslav Anashkin*, *L2 support engineer*,

*Mirantis Inc.*

On Wed, Apr 30, 2014 at 6:47 PM, Karthi Palaniappan (CW) <

kpalania@xxxxxxxxxxx> wrote:

> Hi,

>

>

>

> I have a few queries about Mirantis OpenStack deployment. Could you please

> confirm the following?

>

>

>

> 1. MirantisOpenStack-4.1.iso contains the Havana release, not the Icehouse

> release. Is there a newer ISO that contains Icehouse?

>

>

>

> 2. Is this the recommended architecture for Mirantis certification

> on Brocade VCS plugin?

>

> I have 4 VMs (Fuel master node IP: 10.20.0.2, Fuel slave 1: 10.20.0.217,

> slave 2: 10.20.0.252 and slave 3: 10.20.0.198) hosted on a physical

> machine running Ubuntu 12.04 LTS. I used the VirtualBox script to

> create these VMs, then I created a new environment with 1 VM as the controller

> node and 2 VMs as compute nodes. If I ping between the VMs, the

> communication goes via the OVS bridge instead of the Brocade VDX device (L2

> switching device), so I am not sure whether we can test our VCS plugin code

> with this test bed.

>

>

>

> 3. Shall I use a physical server as one more compute node managed by

> this Fuel master node?

>

> a. If yes, how can I include/discover the physical compute node in

> the OpenStack cluster?

>

> b. We are going to use Neutron network isolation using Open vSwitch

> and VLANs. Since I used the Fuel script, it created 3 virtual NICs. In the case

> of a physical node, how should I configure the interfaces? Shall I go

> ahead with the default interface configuration or the modified one?

>

> Note : Physical server’s eth0 is connected to management network.

>

>

>

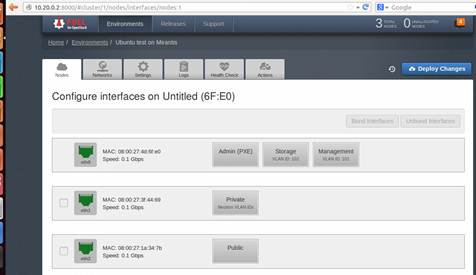

> *Default interface configuration*

>

>

>

>

>

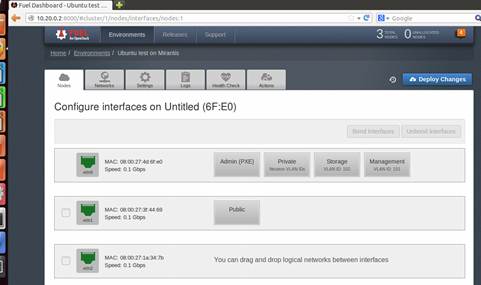

> *Modified interface configuration*

>

> Regards,

>

> Karthi

--

*Kind Regards*

*Miroslav Anashkin*, *L2 support engineer*,

*Mirantis Inc.*

*+7(495)640-4944 (office receptionist)*

*+1(650)587-5200 (office receptionist, call from US)*

*35b, Bld. 3, Vorontsovskaya St.*

*Moscow, Russia, 109147.*

www.mirantis.com

manashkin@xxxxxxxxxxxx
